Grad-CAM

Unveiling the Essence with Grad-CAM: Decoding CNN Model Decision-Making

Introduction

In the realm of Convolutional Neural Networks (CNNs), being able to interpret how a model reaches its decisions is paramount. Enter Grad-CAM, a technique that shows which regions of an input image drive a model's classification. In my latest project, I used Grad-CAM to examine my CNN model and shed light on how it makes its decisions.

Demystifying Grad-CAM

Gradient-weighted Class Activation Mapping, or Grad-CAM, is one of the most widely used interpretability techniques in deep learning. It provides a window into the inner workings of CNNs, allowing us to visualize the regions within an image that most influence the model's decision. Unlike the original Class Activation Mapping (CAM), which only works for networks where global average pooling feeds directly into the output layer, Grad-CAM doesn't require architectural modifications, making it a versatile tool for analyzing a wide range of CNN architectures.

How Grad-CAM Works

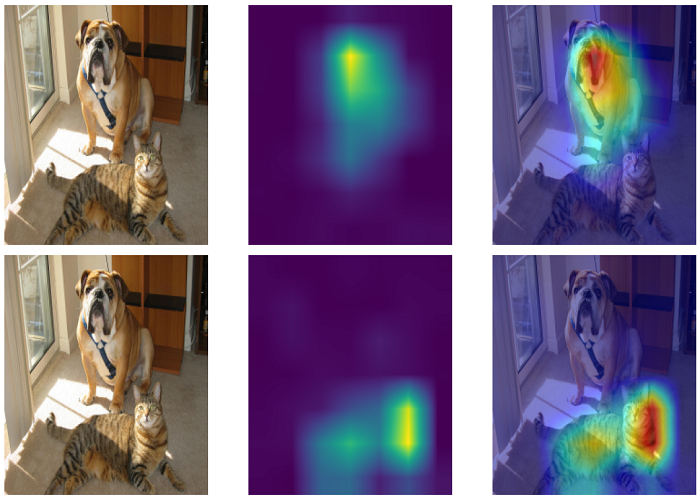

At its core, Grad-CAM uses the gradients of the target class score with respect to the feature maps of the final convolutional layer of a CNN. These gradients are averaged over each feature map's spatial dimensions to give one importance weight per map. The feature maps are then combined as a weighted sum and passed through a ReLU, so that only regions with a positive influence on the chosen class remain. The result is a coarse heatmap that is upsampled and overlaid onto the original image, illustrating where the model "looks" when making a classification.
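The weighting step described above can be sketched in a few lines of NumPy. This is a minimal, framework-agnostic illustration and not the script from my project: the function name `grad_cam` and the `(K, H, W)` array layout are assumptions, and it expects the feature maps and their gradients to have already been extracted from the model.

```python
import numpy as np


def grad_cam(activations, gradients):
    """Compute a Grad-CAM heatmap from precomputed arrays.

    activations: feature maps of the final conv layer, shape (K, H, W).
    gradients:   gradients of the target class score with respect to
                 those feature maps, same shape (K, H, W).
    Both the function name and this layout are illustrative assumptions.
    """
    activations = np.asarray(activations, dtype=np.float64)
    gradients = np.asarray(gradients, dtype=np.float64)
    if activations.ndim != 3 or activations.shape != gradients.shape:
        raise ValueError("expected two arrays of identical shape (K, H, W)")

    # One importance weight per feature map: the spatial average
    # of that map's gradients.
    weights = gradients.mean(axis=(1, 2))  # shape (K,)

    # Weighted sum of the feature maps over the channel axis.
    cam = np.tensordot(weights, activations, axes=1)  # shape (H, W)

    # ReLU: keep only regions that push the class score up.
    cam = np.maximum(cam, 0.0)

    # Scale to [0, 1] for display; an all-zero map is left unchanged.
    peak = cam.max()
    if peak > 0:
        cam = cam / peak
    return cam
```

In practice, the two input arrays would come from the deep learning framework in use, for example by recording the final convolutional layer's output during the forward pass and differentiating the class score with respect to it.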

Advantages of Grad-CAM

Grad-CAM's non-intrusive nature is a key advantage. It works on an already trained model, so we can interpret a CNN without retraining it or adjusting its architecture, and the model's original behavior is preserved. This interpretability is invaluable in applications where understanding the rationale behind a model's decision is crucial, such as medical image analysis and autonomous vehicles.

Application in My Project

In my recent project, I integrated Grad-CAM to unravel the decision-making process of my CNN model. By visualizing the salient regions in images that drove the model's classifications, I gained a deeper understanding of its behavior. This not only enhances the interpretability of the model but also opens avenues for refining its performance and ensuring more informed decision-making in classification tasks.
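To give a sense of what visualizing the salient regions involves, here is a minimal sketch of the overlay step. It is not the code from my script: the function name `overlay_heatmap`, the `alpha` parameter, the nearest-neighbour upsampling, and the plain red tint are all simplifying assumptions, and it expects a heatmap already scaled to [0, 1]. Real pipelines typically use smoother interpolation and a proper colormap.

```python
import numpy as np


def overlay_heatmap(image, cam, alpha=0.4):
    """Blend a coarse heatmap onto an RGB image.

    image: array of shape (H, W, 3) with values in [0, 1].
    cam:   heatmap of shape (h, w) with values in [0, 1].
    alpha: weight of the heat layer in the blend (hypothetical default).
    """
    image = np.asarray(image, dtype=np.float64)
    cam = np.asarray(cam, dtype=np.float64)
    H, W = image.shape[:2]
    h, w = cam.shape

    # Nearest-neighbour upsampling: map each image pixel to a heatmap cell.
    rows = np.arange(H) * h // H
    cols = np.arange(W) * w // W
    cam_up = cam[rows[:, None], cols[None, :]]  # shape (H, W)

    # Simple heat layer: intensity goes into the red channel only.
    heat = np.zeros_like(image)
    heat[..., 0] = cam_up

    # Alpha-blend the heat layer with the original image.
    blended = (1.0 - alpha) * image + alpha * heat
    return np.clip(blended, 0.0, 1.0)
```

A larger `alpha` makes the highlighted regions stand out more, at the cost of hiding more of the underlying image.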

Conclusion

Grad-CAM stands as a beacon in the quest for transparency and interpretability in deep learning. Its application goes beyond mere visualization, offering a roadmap to understand the reasoning behind CNN model decisions. As I share the insights gained from incorporating Grad-CAM into my project, I invite you to explore this powerful technique and unlock a new dimension in comprehending the decision-making prowess of CNNs.

My Python script is available on GitHub.

Here is the output of Grad-CAM on our beans Sorter CNN classification project: